This is the sequel to my previous article explaining the implementation details of signals and slots. In Part 1, we saw the general principle and how it works with the old syntax. In this blog post, we will look at the implementation details behind the new function-pointer-based syntax in Qt5.

New Syntax in Qt5

The new syntax looks like this:

QObject::connect(&a, &Counter::valueChanged, &b, &Counter::setValue);

Why the new syntax?

I already explained the advantages of the new syntax in a dedicated blog entry. To summarize, the new syntax allows compile-time checking of the signals and slots. It also allows automatic conversion of the arguments if they do not have the same types. As a bonus, it enables the support for lambda expressions.

New overloads

Only a few changes were required to make that possible.

The main idea is to have new overloads of QObject::connect which take pointers to functions as arguments instead of char*.

There are three new static overloads of QObject::connect (not actual code):

QObject::connect(const QObject *sender, PointerToMemberFunction signal, const QObject *receiver, PointerToMemberFunction slot, Qt::ConnectionType type)
QObject::connect(const QObject *sender, PointerToMemberFunction signal, PointerToFunction method)
QObject::connect(const QObject *sender, PointerToMemberFunction signal, Functor method)

The first one is the closest to the old syntax: you connect a signal from the sender to a slot in a receiver object. The two other overloads connect a signal to a static function or a functor object without a receiver.

They are very similar and we will only analyze the first one in this article.

Pointer to Member Functions

Before continuing my explanation, I would like to make a short digression to talk a bit about pointers to member functions.

Here is a simple code sample that declares a pointer to member function and calls it.

void (QPoint::*myFunctionPtr)(int); // Declares myFunctionPtr as a pointer to
                                    // a member function returning void and
                                    // taking (int) as parameter
myFunctionPtr = &QPoint::setX;
QPoint p;
QPoint *pp = &p;
(p.*myFunctionPtr)(5);   // calls p.setX(5);
(pp->*myFunctionPtr)(5); // calls pp->setX(5);

Pointers to members and pointers to member functions are part of the subset of C++ that is not much used and thus lesser known. The good news is that you still do not really need to know much about them to use Qt and its new syntax. All you need to remember is to put the & before the name of the signal in your connect call. You will not need to cope with the cryptic ::*, .* or ->* operators.

These cryptic operators allow you to declare a pointer to a member or access it. The type of such pointers includes the return type, the class which owns the member, the types of each argument and the const-ness of the function.

You cannot really convert pointers to member functions to anything, and in particular not to void*, because they have a different sizeof. If the function varies slightly in signature, you cannot convert from one to the other. For example, even converting from void (MyClass::*)(int) const to void (MyClass::*)(int) is not allowed. (You could do it with reinterpret_cast, but calling through the converted pointer would be undefined behaviour according to the standard.)
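These conversion rules can be checked at compile time with the standard type traits. The following is only a sketch: MyClass, NonConstMember and ConstMember are hypothetical names made up for the illustration, not Qt code.

```cpp
#include <type_traits>

// Hypothetical class used only for this illustration.
struct MyClass {
    void setValue(int) {}
    void show(int) const {}
};

using NonConstMember = void (MyClass::*)(int);
using ConstMember = void (MyClass::*)(int) const;

// The const-ness of the function is part of the pointer type.
static_assert(!std::is_same<NonConstMember, ConstMember>::value,
              "the two pointer types are distinct");

// There is no implicit conversion in either direction.
static_assert(!std::is_convertible<ConstMember, NonConstMember>::value,
              "const member pointer does not convert to non-const");
static_assert(!std::is_convertible<NonConstMember, ConstMember>::value,
              "non-const member pointer does not convert to const");

// A pointer to member function does not convert to void* either.
static_assert(!std::is_convertible<NonConstMember, void *>::value,
              "no conversion to void*");

// Each pointer type only accepts a member function with exactly its signature.
NonConstMember setter = &MyClass::setValue;
ConstMember shower = &MyClass::show;
```

If any of the static_assert conditions were wrong, the file would simply fail to compile, which is the same kind of early error the new connect syntax aims for.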

Pointers to member functions are not just like normal function pointers. A normal function pointer is just a normal pointer to the address where the code of that function lies. But a pointer to member function needs to store more information: member functions can be virtual, and there is also an offset to apply to the hidden this in case of multiple inheritance. The sizeof of a pointer to a member function can even vary depending on the class. This is why we need to take special care when manipulating them.
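To make the two extra pieces of information concrete, here is a small sketch in plain C++. All the class names (Shape, Triangle, Named, Scored, Player) are invented for the example. It shows a call through a pointer to a virtual member function, and a call that requires an adjustment of this because of multiple inheritance.

```cpp
#include <cassert>

// Hypothetical classes, only for illustration.
struct Shape {
    virtual ~Shape() {}
    virtual int sides() const { return 0; }
};
struct Triangle : Shape {
    int sides() const override { return 3; }
};

struct Named { int id = 7; };
struct Scored {
    int score = 42;
    int getScore() const { return score; }
};
// The Scored sub-object does not start at the same address as Player.
struct Player : Named, Scored {};

// The call goes through the pointer but is still dispatched virtually.
int callVirtual() {
    int (Shape::*fn)() const = &Shape::sides;
    Triangle t;
    Shape &s = t;
    return (s.*fn)();
}

// A pointer to a member of a base converts implicitly to a pointer to a
// member of the derived class; the call then has to adjust `this` so that
// getScore() sees the Scored sub-object.
int callWithThisAdjustment() {
    int (Player::*fn)() const = &Scored::getScore;
    Player p;
    return (p.*fn)();
}
```

Here callVirtual() returns 3 because Triangle::sides is selected at run time, and callWithThisAdjustment() returns 42 because the call reads the score member of the Scored sub-object. How the compiler encodes this information inside the pointer is implementation-specific, which is why the sketch does not rely on any particular sizeof.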

Type Traits: QtPrivate::FunctionPointer

Let me introduce you to the QtPrivate::FunctionPointer type trait. A trait is basically a helper class that gives meta data about a given type. Another example of a trait in Qt is QTypeInfo.

What we will need to know in order to implement the new syntax is information about a function pointer.

The template<typename T> struct FunctionPointer will give us information about T via its members.

ArgumentCount: An integer representing the number of arguments of the function.
Object: Exists only for pointers to member functions. It is a typedef to the class of which the function is a member.
Arguments: Represents the list of arguments. It is a typedef to a meta-programming list.
call(T &function, QObject *receiver, void **args): A static function that will call the function, applying the given parameters.

Qt still supports C++98 compilers, which means we unfortunately cannot require support for variadic templates. Therefore we had to specialize our trait for each number of arguments. We have four kinds of specializations: normal function pointer, pointer to member function, pointer to const member function and functors. For each kind, we need to specialize for each number of arguments. We support up to six arguments. We also made a specialization using variadic templates, so we support an arbitrary number of arguments if the compiler supports them.
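To give an idea of what such a trait looks like, here is a simplified sketch of the variadic flavour. It is not the code from qobjectdefs_impl.h: the functor specialization and the call function are left out, List is reduced to an empty struct, and Counter is a hypothetical class used only to exercise the trait.

```cpp
#include <type_traits>

// Minimal stand-in for the meta-programming list of argument types.
template<typename... Ts> struct List {};

// Primary template: T is not a function pointer. Note that there is no
// Object typedef here.
template<typename Func>
struct FunctionPointer {
    enum { ArgumentCount = -1 };
};

// Pointer to member function.
template<class Obj, typename Ret, typename... Args>
struct FunctionPointer<Ret (Obj::*)(Args...)> {
    typedef Obj Object;
    typedef List<Args...> Arguments;
    typedef Ret ReturnType;
    enum { ArgumentCount = sizeof...(Args) };
};

// Pointer to const member function.
template<class Obj, typename Ret, typename... Args>
struct FunctionPointer<Ret (Obj::*)(Args...) const> {
    typedef Obj Object;
    typedef List<Args...> Arguments;
    typedef Ret ReturnType;
    enum { ArgumentCount = sizeof...(Args) };
};

// Normal function pointer: no Object typedef.
template<typename Ret, typename... Args>
struct FunctionPointer<Ret (*)(Args...)> {
    typedef List<Args...> Arguments;
    typedef Ret ReturnType;
    enum { ArgumentCount = sizeof...(Args) };
};

// Hypothetical class used to try the trait out.
struct Counter {
    void setValue(int) {}
    int value() const { return 0; }
};

typedef FunctionPointer<decltype(&Counter::setValue)> SetValueTraits;
typedef FunctionPointer<decltype(&Counter::value)> ValueTraits;
```

With these definitions, SetValueTraits::ArgumentCount is 1, SetValueTraits::Object is Counter and SetValueTraits::Arguments is List<int>. Without variadic templates, each of the specializations above has to be written out once per number of arguments.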

The implementation of FunctionPointer lies in qobjectdefs_impl.h.

QObject::connect

The implementation relies on a lot of template code. I am not going to explain all of it.

Here is the code of the first new overload from qobject.h:

template<typename Func1, typename Func2>
static inline QMetaObject::Connection connect(
        const typename QtPrivate::FunctionPointer<Func1>::Object *sender, Func1 signal,
        const typename QtPrivate::FunctionPointer<Func2>::Object *receiver, Func2 slot,
        Qt::ConnectionType type = Qt::AutoConnection)
{
    typedef QtPrivate::FunctionPointer<Func1> SignalType;
    typedef QtPrivate::FunctionPointer<Func2> SlotType;
    //compilation error if the arguments does not match.
    Q_STATIC_ASSERT_X(int(SignalType::ArgumentCount) >= int(SlotType::ArgumentCount),
                      "The slot requires more arguments than the signal provides.");
    Q_STATIC_ASSERT_X((QtPrivate::CheckCompatibleArguments<typename SignalType::Arguments,
                                                           typename SlotType::Arguments>::value),
                      "Signal and slot arguments are not compatible.");
    Q_STATIC_ASSERT_X((QtPrivate::AreArgumentsCompatible<typename SlotType::ReturnType,
                                                         typename SignalType::ReturnType>::value),
                      "Return type of the slot is not compatible with the return type of the signal.");
    const int *types;
    /* ... Skipped initialization of types, used for QueuedConnection ...*/
    QtPrivate::QSlotObjectBase *slotObj = new QtPrivate::QSlotObject<Func2,
            typename QtPrivate::List_Left<typename SignalType::Arguments, SlotType::ArgumentCount>::Value,
            typename SignalType::ReturnType>(slot);
    return connectImpl(sender, reinterpret_cast<void **>(&signal),
                       receiver, reinterpret_cast<void **>(&slot), slotObj,
                       type, types, &SignalType::Object::staticMetaObject);
}

You will notice in the function signature that sender and receiver are not just QObject* as the documentation points out. They are pointers to typename FunctionPointer::Object instead. This uses SFINAE to make this overload only enabled for pointers to member functions, because Object only exists in FunctionPointer if the type is a pointer to member function.
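The SFINAE mechanism can be reproduced in a few lines of plain C++. This is a reduced sketch, not the Qt code: connectSketch, Overload and Counter are hypothetical names, and the second overload is a simple catch-all rather than the real functor overload.

```cpp
#include <cassert>

// Primary template: no Object typedef.
template<typename Func> struct FunctionPointer {};

// Only pointers to member functions provide Object.
template<class Obj, typename Ret, typename... Args>
struct FunctionPointer<Ret (Obj::*)(Args...)> {
    typedef Obj Object;
};

enum class Overload { MemberFunction, Other };

// Enabled only when FunctionPointer<Func>::Object exists. For any other
// Func the substitution fails and this overload is silently discarded.
template<typename Func>
Overload connectSketch(const typename FunctionPointer<Func>::Object *, Func) {
    return Overload::MemberFunction;
}

// Fallback that accepts anything else (lambdas, functors, ...).
template<typename Func>
Overload connectSketch(const void *, Func) {
    return Overload::Other;
}

// Hypothetical receiver class.
struct Counter {
    void setValue(int) {}
};

Overload viaMemberFunction() {
    Counter c;
    return connectSketch(&c, &Counter::setValue);
}

Overload viaLambda() {
    Counter c;
    return connectSketch(&c, [](int) {});
}
```

viaMemberFunction() picks the first overload, because converting Counter* to const Counter* is a better match than converting it to const void*. viaLambda() can only use the second one, since a lambda type has no Object in the trait.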

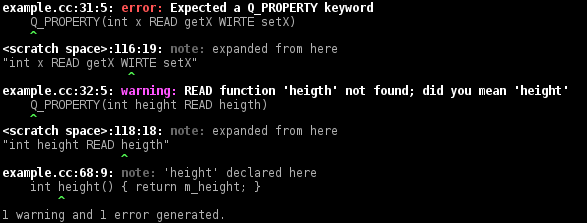

We then start with a bunch of Q_STATIC_ASSERT. They should generate sensible compilation error messages when the user makes a mistake. If the user did something wrong, it is important that he/she sees an error here and not in the soup of template code in the _impl.h files. We want to hide the underlying implementation from the user, who should not need to care about it. That means that if you ever see a confusing error in the implementation details, it should be considered a bug and reported.

We then allocate a QSlotObject that is going to be passed to connectImpl(). The QSlotObject is a wrapper around the slot that will help calling it. It also knows the type of the signal arguments so it can do the proper type conversion. We use List_Left to only pass the same number of arguments as the slot takes, which allows connecting a signal with many arguments to a slot with fewer arguments.
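The following sketch shows how a wrapper can call a slot from an array of void* while ignoring the extra arguments of the signal. It is written from scratch for this article: callSlot, callSlotImpl and Receiver are hypothetical names, it needs C++14 for std::index_sequence, and it assumes that the argument types of the signal and the slot are identical (the real QSlotObject also handles conversions). The layout of the array follows the Qt convention: args[0] is reserved for the return value and the arguments start at args[1].

```cpp
#include <cassert>
#include <cstddef>
#include <type_traits>
#include <utility>

// Hypothetical receiver with a one-argument slot.
struct Receiver {
    int last = 0;
    void setValue(int v) { last = v; }
};

// Unpacks args[1], args[2], ... into the parameters of the slot.
template<typename Obj, typename... Args, std::size_t... I>
void callSlotImpl(void (Obj::*slot)(Args...), Obj *receiver, void **args,
                  std::index_sequence<I...>) {
    (receiver->*slot)(
        *static_cast<typename std::remove_reference<Args>::type *>(args[I + 1])...);
}

// Only as many entries as the slot has parameters are read, so any
// additional signal arguments are simply left untouched.
template<typename Obj, typename... Args>
void callSlot(void (Obj::*slot)(Args...), Obj *receiver, void **args) {
    callSlotImpl(slot, receiver, args, std::index_sequence_for<Args...>());
}

// Simulates a signal emitting (int, double) connected to a slot taking (int).
int deliver() {
    int value = 12;
    double ratio = 0.5;
    void *args[] = { nullptr, &value, &ratio };
    Receiver r;
    callSlot(&Receiver::setValue, &r, args);
    return r.last;
}
```

deliver() returns 12: the slot received the first argument of the simulated signal and the second one was dropped.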

QObject::connectImpl is the private internal function that will perform the connection. It is similar to the original syntax; the difference is that instead of storing a method index in the QObjectPrivate::Connection structure, we store a pointer to the QSlotObjectBase.

The reason why we pass &slot as a void** is only to be able to compare it if the type is Qt::UniqueConnection.

We also pass &signal as a void**. It is a pointer to the member function pointer. (Yes, a pointer to the pointer.)

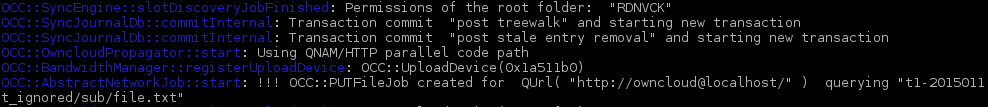

Signal Index

We need to establish a relationship between the signal pointer and the signal index. We use MOC for that. Yes, that means this new syntax is still using the MOC and that there are no plans to get rid of it :-).

MOC will generate code in qt_static_metacall that compares the parameter and returns the right index. connectImpl will call the qt_static_metacall function with the pointer to the function pointer.

void Counter::qt_static_metacall(QObject *_o, QMetaObject::Call _c, int _id, void **_a)
{
    if (_c == QMetaObject::InvokeMetaMethod) {
        /* .... skipped ....*/
    } else if (_c == QMetaObject::IndexOfMethod) {
        int *result = reinterpret_cast<int *>(_a[0]);
        void **func = reinterpret_cast<void **>(_a[1]);
        {
            typedef void (Counter::*_t)(int );
            if (*reinterpret_cast<_t *>(func) == static_cast<_t>(&Counter::valueChanged)) {
                *result = 0;
            }
        }
        {
            typedef QString (Counter::*_t)(const QString & );
            if (*reinterpret_cast<_t *>(func) == static_cast<_t>(&Counter::someOtherSignal)) {
                *result = 1;
            }
        }
        {
            typedef void (Counter::*_t)();
            if (*reinterpret_cast<_t *>(func) == static_cast<_t>(&Counter::anotherSignal)) {
                *result = 2;
            }
        }
    }
}

Once we have the signal index, we can proceed like in the other syntax.

The QSlotObjectBase

QSlotObjectBase is the object passed to connectImpl that represents the slot.

Before showing the real code, this is what QObject::QSlotObjectBase was in Qt5 alpha:

struct QSlotObjectBase {
    QAtomicInt ref;
    QSlotObjectBase() : ref(1) {}
    virtual ~QSlotObjectBase();
    virtual void call(QObject *receiver, void **a) = 0;
    virtual bool compare(void **) { return false; }
};

It is basically an interface that is meant to be re-implemented by template classes implementing the call and comparison of the function pointers.

It is re-implemented by one of the QSlotObject, QStaticSlotObject or QFunctorSlotObject template classes.

Fake Virtual Table

The problem with that is that each instantiation of those objects would need to create a virtual table which contains not only pointers to virtual functions but also a lot of information we do not need, such as RTTI. That would result in a lot of superfluous data and relocations in the binaries.

In order to avoid that, QSlotObjectBase was changed not to be a C++ polymorphic class.

Virtual functions are emulated by hand.

class QSlotObjectBase {
    QAtomicInt m_ref;
    typedef void (*ImplFn)(int which, QSlotObjectBase* this_, QObject *receiver, void **args, bool *ret);
    const ImplFn m_impl;
protected:
    enum Operation { Destroy, Call, Compare };
public:
    explicit QSlotObjectBase(ImplFn fn) : m_ref(1), m_impl(fn) {}
    inline int ref() Q_DECL_NOTHROW { return m_ref.ref(); }
    inline void destroyIfLastRef() Q_DECL_NOTHROW {
        if (!m_ref.deref()) m_impl(Destroy, this, 0, 0, 0);
    }
    inline bool compare(void **a) { bool ret; m_impl(Compare, this, 0, a, &ret); return ret; }
    inline void call(QObject *r, void **a) { m_impl(Call, this, r, a, 0); }
};

The m_impl is a (normal) function pointer which performs the three operations that were previously virtual functions. The "re-implementations" set it to their own implementation in the constructor.
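To show what such a "re-implementation" can look like, here is a reduced sketch in plain C++. It is not the Qt code: there is no reference counting and no Compare operation, SlotObjectBase and FunctorSlotObject are hypothetical names, and the slot is assumed to take exactly one int found in args[1].

```cpp
#include <cassert>

class SlotObjectBase {
protected:
    enum Operation { Destroy, Call };
    typedef void (*ImplFn)(int which, SlotObjectBase *self, void **args);
public:
    explicit SlotObjectBase(ImplFn fn) : m_impl(fn) {}
    void call(void **args) { m_impl(Call, this, args); }
    void destroy() { m_impl(Destroy, this, nullptr); }
protected:
    ~SlotObjectBase() {}   // non-virtual: destruction goes through destroy()
private:
    const ImplFn m_impl;   // plays the role of the virtual table
};

template<typename Functor>
class FunctorSlotObject : public SlotObjectBase {
    Functor m_functor;
    // One static function per instantiation replaces all virtual functions.
    static void impl(int which, SlotObjectBase *self, void **args) {
        FunctorSlotObject *that = static_cast<FunctorSlotObject *>(self);
        switch (which) {
        case Destroy:
            delete that;
            break;
        case Call:
            that->m_functor(*static_cast<int *>(args[1]));
            break;
        }
    }
public:
    explicit FunctorSlotObject(Functor f) : SlotObjectBase(&impl), m_functor(f) {}
};

// Calls a lambda twice through the base class and returns the accumulated sum.
int runDemo() {
    int total = 0;
    auto add = [&total](int v) { total += v; };
    SlotObjectBase *slot = new FunctorSlotObject<decltype(add)>(add);
    int value = 5;
    void *args[] = { nullptr, &value };
    slot->call(args);
    slot->call(args);
    slot->destroy();
    return total;
}
```

runDemo() returns 10. Each instantiation of FunctorSlotObject only contributes one static function to the binary, with no virtual table and no RTTI.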

Please do not go into your code and replace all your virtual functions with such a hack because you read here that it was good. This is only done in this case because almost every call to connect would generate a new different type (since the QSlotObject has template parameters which depend on the signature of the signal and the slot).

Protected, Public, or Private Signals.

Signals were protected in Qt4 and before. It was a design choice, as signals should be emitted by the object when it changes its state. They should not be emitted from outside the object, and calling a signal on another object is almost always a bad idea.

However, with the new syntax, you need to be able to take the address of the signal from the point where you make the connection. The compiler will only let you do that if you have access to that signal. Writing &Counter::valueChanged would generate a compiler error if the signal was not public.

In Qt 5 we had to change signals from protected to public. This is unfortunate since this means anyone can emit the signals. We found no way around it. We tried a trick with the emit keyword. We tried returning a special value. But nothing worked. I believe that the advantages of the new syntax outweigh the problem that signals are now public.

Sometimes it is even desirable to have the signal private. This is the case for example in QAbstractItemModel, where otherwise developers tend to emit signals from the derived class, which is not what the API wants. There used to be a pre-processor trick that made signals private, but it broke the new connection syntax.

A new hack has been introduced. QPrivateSignal is a dummy (empty) struct declared private in the Q_OBJECT macro. It can be used as the last parameter of the signal. Because it is private, only the object has the right to construct it for calling the signal. MOC will ignore the QPrivateSignal last argument while generating signature information. See qabstractitemmodel.h for an example.
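The idea behind the private tag can be sketched without Qt. In the example below, Model, PrivateSignal and rowInserted are hypothetical names, and the "signal" is an ordinary member function with a body, since there is no MOC to generate one.

```cpp
#include <cassert>

class Model {
    struct PrivateSignal {};   // private: code outside Model cannot name this type
public:
    int lastInserted = -1;

    // Stand-in for a signal. It is public, so its address can be taken,
    // but the last parameter requires a type only Model can spell.
    void rowInserted(int row, PrivateSignal) { lastInserted = row; }

    // Only the class itself writes PrivateSignal() to "emit" the signal.
    void insertRow(int row) { rowInserted(row, PrivateSignal()); }
};

// Taking the address from the outside still works, which is what the
// new connect syntax needs.
bool canTakeAddress() {
    auto signal = &Model::rowInserted;
    return signal != nullptr;
}

int insertThroughPublicApi() {
    Model m;
    m.insertRow(3);
    return m.lastInserted;
}
```

Writing m.rowInserted(3, Model::PrivateSignal()) outside the class is rejected by the compiler because PrivateSignal is private. Note that this bare-bones sketch does not stop a caller from passing {} as the last argument, since an empty struct can be brace-initialized without naming its type.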

More Template Code

The rest of the code is in qobjectdefs_impl.h and qobject_impl.h. It is mostly standard dull template code.

I will not go into much more detail in this article, but I will just go over a few items that are worth mentioning.

Meta-Programming List

As pointed out earlier, FunctionPointer::Arguments is a list of the arguments. The code needs to operate on that list: iterate over each element, take only a part of it or select a given item.

That is why there is QtPrivate::List, which can represent a list of types. Some helpers to operate on it are QtPrivate::List_Select and QtPrivate::List_Left, which give the N-th element in the list and a sub-list containing the first N elements, respectively.
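Here is a sketch of how such helpers can be written on top of a variadic list. It follows the same general idea as the Qt helpers but is not copied from qobjectdefs_impl.h: the Head and Tail typedefs and the List_Append helper are names chosen for this example.

```cpp
#include <type_traits>

// A list of types. Non-empty lists expose their first element and the rest.
template<typename...> struct List {};
template<typename First, typename... Rest>
struct List<First, Rest...> {
    typedef First Head;
    typedef List<Rest...> Tail;
};

// Concatenates two lists.
template<typename A, typename B> struct List_Append;
template<typename... A, typename... B>
struct List_Append<List<A...>, List<B...>> {
    typedef List<A..., B...> Value;
};

// List_Select<L, N>::Value is the N-th type of L (counting from 0).
template<typename L, int N> struct List_Select {
    typedef typename List_Select<typename L::Tail, N - 1>::Value Value;
};
template<typename L> struct List_Select<L, 0> {
    typedef typename L::Head Value;
};

// List_Left<L, N>::Value is the list made of the first N types of L.
template<typename L, int N> struct List_Left {
    typedef typename List_Append<
        List<typename L::Head>,
        typename List_Left<typename L::Tail, N - 1>::Value>::Value Value;
};
template<typename L> struct List_Left<L, 0> {
    typedef List<> Value;
};

// Hypothetical signal argument list.
typedef List<int, double, char> SignalArguments;
```

With these definitions, List_Select<SignalArguments, 1>::Value is double and List_Left<SignalArguments, 2>::Value is List<int, double>, which is the kind of truncated list handed to a slot that takes fewer arguments than the signal.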

The implementation of List is different for compilers that support variadic templates and compilers that do not.

With variadic templates, it is a template<typename... T> struct List;. The list of arguments is just encapsulated in the template parameters.

For example: the type of a list containing the arguments (int, QString, QObject*) would simply be:

List<int, QString, QObject *>

Without variadic templates, it is a LISP-style list: template<typename Head, typename Tail> struct List; where Tail can be either another List or void for the end of the list.

The same example as before would be:

List<int, List<QString, List<QObject *, void> > >
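A LISP-style list can be walked recursively through its head and tail. The sketch below is an illustration only: the Car and Cdr typedefs, the List_Length helper and the Widget type (standing in for QObject) are assumptions made for this example.

```cpp
#include <type_traits>

// LISP-style list: a head type and a tail that is another List or void.
template<typename Head, typename Tail> struct List {
    typedef Head Car;
    typedef Tail Cdr;
};

// Number of elements: one more than the length of the tail.
template<typename L> struct List_Length {
    enum { Value = 1 + List_Length<typename L::Cdr>::Value };
};
template<> struct List_Length<void> {
    enum { Value = 0 };
};

// N-th element (counting from 0): skip N tails, then take the head.
template<typename L, int N> struct List_Select {
    typedef typename List_Select<typename L::Cdr, N - 1>::Value Value;
};
template<typename L> struct List_Select<L, 0> {
    typedef typename L::Car Value;
};

struct Widget;   // hypothetical stand-in for QObject

typedef List<int, List<double, List<Widget *, void> > > Arguments;
```

Here List_Length<Arguments>::Value is 3 and List_Select<Arguments, 2>::Value is Widget *. Nothing in the list itself requires more than C++98 templates.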

ApplyReturnValue Trick

In the function FunctionPointer::call, args[0] is meant to receive the return value of the slot. If the signal returns a value, it is a pointer to an object of the return type of the signal; otherwise, it is 0. If the slot returns a value, we need to copy it into args[0]. If it returns void, we do nothing.

The problem is that it is not syntactically correct to use the return value of a function that returns void. Should I have duplicated the already huge amount of code: once for the void return type and once for the non-void?

No, thanks to the comma operator.

In C++ you can do something like this:

functionThatReturnsVoid(), somethingElse();

You could have replaced the comma by a semicolon and everything would have been fine.

Where it becomes interesting is when you call it with something that is not void:

functionThatReturnsInt(), somethingElse();

There, the comma will actually call an operator that you can even overload. This is what we do in qobjectdefs_impl.h:

template <typename T>
struct ApplyReturnValue {
    void *data;
    ApplyReturnValue(void *data_) : data(data_) {}
};
template<typename T, typename U>
void operator,(const T &value, const ApplyReturnValue<U> &container) {
    if (container.data)
        *reinterpret_cast<U*>(container.data) = value;
}
template<typename T>
void operator,(T, const ApplyReturnValue<void> &) {}

ApplyReturnValue is just a wrapper around a void*. Then it can be used in each helper. This is for example the case of a functor without arguments:

static void call(Function &f, void *, void **arg) {
    f(), ApplyReturnValue<SignalReturnType>(arg[0]);
}

This code is inlined, so it will not cost anything at run-time.
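The trick can be tried outside of Qt. The sketch below reuses the same ApplyReturnValue idea in a self-contained file. The callFunctor wrapper and the answer and nothing functions are hypothetical names, added only so that both the void and the non-void cases can be exercised.

```cpp
#include <cassert>

template<typename T>
struct ApplyReturnValue {
    void *data;
    explicit ApplyReturnValue(void *data_) : data(data_) {}
};

// Selected when the left operand has a value: store it if there is a place for it.
template<typename T, typename U>
void operator,(const T &value, const ApplyReturnValue<U> &container) {
    if (container.data)
        *reinterpret_cast<U *>(container.data) = value;
}

// Selected when the signal returns void: the value is dropped.
template<typename T>
void operator,(T, const ApplyReturnValue<void> &) {}

// One single helper, whatever the return type of f is. If f() returns void,
// no overloaded operator can take it, so the built-in comma is used and
// nothing is stored.
template<typename SignalReturnType, typename Function>
void callFunctor(Function f, void **arg) {
    f(), ApplyReturnValue<SignalReturnType>(arg[0]);
}

int answer() { return 42; }
void nothing() {}

int storeResult() {
    int result = 0;
    void *args[] = { &result };
    callFunctor<int>(&answer, args);
    return result;
}

int keepPreviousValue() {
    int result = -1;
    void *args[] = { &result };
    callFunctor<int>(&nothing, args);
    return result;
}
```

storeResult() returns 42 because the overloaded comma copied the return value of answer() into args[0]. keepPreviousValue() returns -1 because nothing() returns void and the built-in comma simply discards the right-hand side. Some compilers may warn about an unused value in that second case.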

Conclusion

This is it for this blog post. There is still a lot to talk about (I have not even mentioned QueuedConnection or thread safety yet), but I hope you found this interesting and that you learned something here that might help you as a programmer.